UXR | UX | UI | PM | AI

During the hackathon, I played multiple roles to drive the team's success. As a product manager, I ensured smooth collaboration by preparing project descriptions, creating organizational files, and facilitating team discussions. I managed time, split and assigned tasks, and led brainstorming sessions to keep us aligned and productive. I also facilitated user research: I created a survey and translated it into English and Spanish, and our teammates shared it with participants. On the day of the hackathon, we analyzed the results to refine our product idea and pivot it in the right direction. As a product designer, I developed user personas and prototypes, designed the final presentation, and co-presented our solution.

October - November 2024

Oscar Nogueda

Polina Vinnikova

Roxana Juarez

Valeria Tafoya

David Colonel

Figma design and prototyping

Google Forms user research

Canva presentation deck

User research

Information architecture

Product management

Visual design

Data analysis

Technical flow mapping

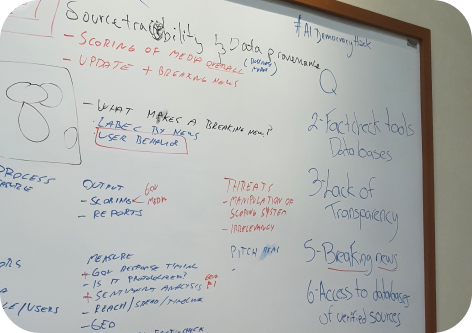

The task was to develop a solution for source traceability and data provenance, specifically exploring how AI could verify the credibility of data by tracing the origins of content and its dissemination points.

The solution needed to align with the hackathon assessment criteria:

Before the hackathon, our team decided to create a scoring and certification system, similar to Twitter's blue checkmark, that would evaluate the credibility of journalists, articles, and media outlets based on source traceability and data provenance. However, after analyzing the user research results during the hackathon, we discovered a critical challenge: journalists and fact-checkers struggle to assess the trustworthiness of breaking news in real time. When news breaks, the speed of reporting is crucial, but fact-checking tools and databases often lack the necessary data at that moment. We pivoted to focus on this problem, asking: How might we help journalists and fact-checkers assess breaking news in real time? This allowed us to adapt our original concept to the urgent needs identified through user feedback.

Events like political shifts, crises, and disasters need reliable coverage.

These are the hardest to verify in real time.

Misinformation spreads quickly without timely checks.

Public trust declines when there is a lack of transparency.

The project operated under significant constraints:

UXR

After our pre-hackathon meeting, where we discussed potential project ideas, we decided to validate those ideas with our target users: fact-checkers and journalists. I created a survey with a mix of multiple-choice, closed, and open-ended questions, and translated it into Spanish.

We distributed the survey to journalists and fact-checkers at Mexican media outlets such as Animal Político. We received eight responses, which highlighted the main challenges our users face.

On the day of the hackathon, we analyzed these insights and identified the main issue: assessing the credibility of breaking news in real time. Based on this feedback, we pivoted from our initial idea of a scoring and certification system to developing a service specifically designed to assess the credibility of breaking news.

Users struggle to confirm the accuracy of breaking news as it unfolds due to a lack of reliable real-time tools.

Users find it difficult to trust media sources when there is no clear visibility into how information is verified and reported.

Users need reliable and easily accessible databases to cross-check information and identify trustworthy sources quickly.

THE DESIGN GOAL

IDEATION AND PRIORITIZATION

To address the challenge of assessing breaking news credibility in real time, we analyzed the key issues faced by fact-checkers and journalists.

Breaking news spreads rapidly, with numerous sources often publishing conflicting information.

Existing fact-checking tools, such as Google Fact Check, cannot verify news as events unfold because they rely on post-event analysis and lack immediate data.

Recognizing this gap, we decided to leverage historical data to create a hybrid system that combines human and AI efforts. This approach allows us to assess credibility in real time, giving users immediate evaluations.

DESIGN AND PROTOTYPING

We prioritized visuals to make the information accessible and intuitive. While the primary audience is fact-checkers and journalists, the service also needs to be easy to understand for general users without a specialized background.

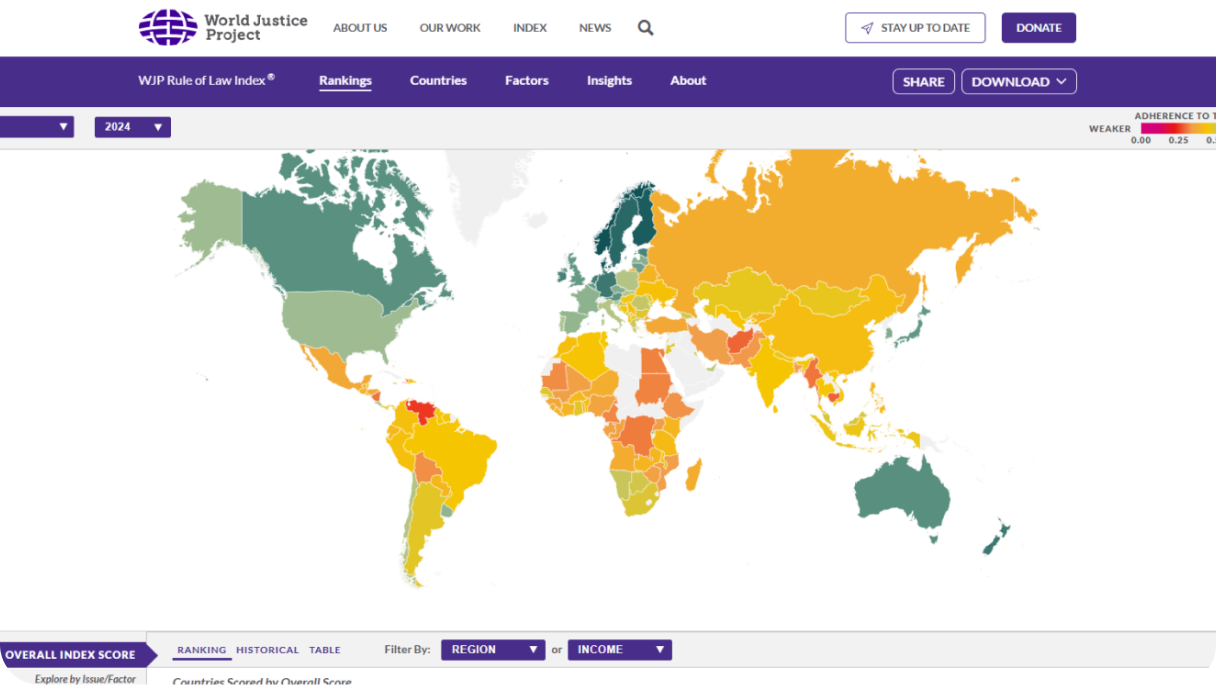

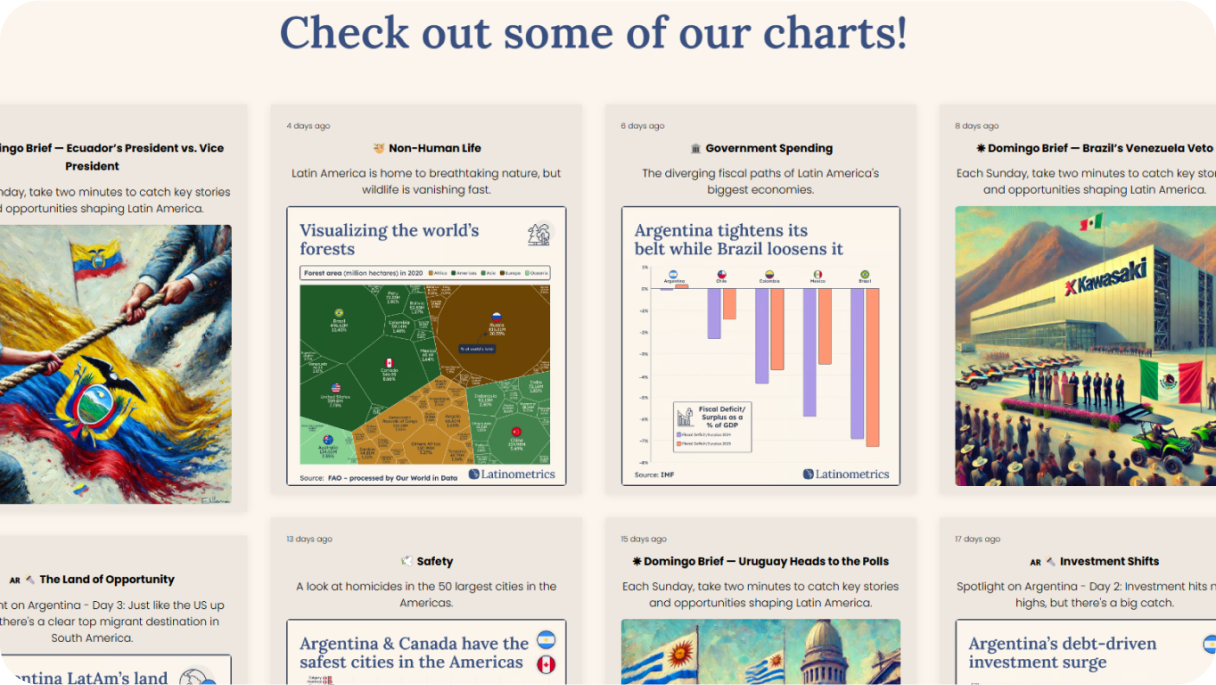

To achieve this, the homepage features visual elements and possibly a learning component to highlight key data insights for general users. We drew inspiration from platforms like World Justice Map and Latino Metrics, which present data in a clear and universally comprehensible way.

For fact-checkers and journalists, we designed a streamlined dashboard where they can easily select or exclude sources and view scores through intuitive visualizations.

This balance ensures the service is both user-friendly and professional, catering to the needs of all audiences.

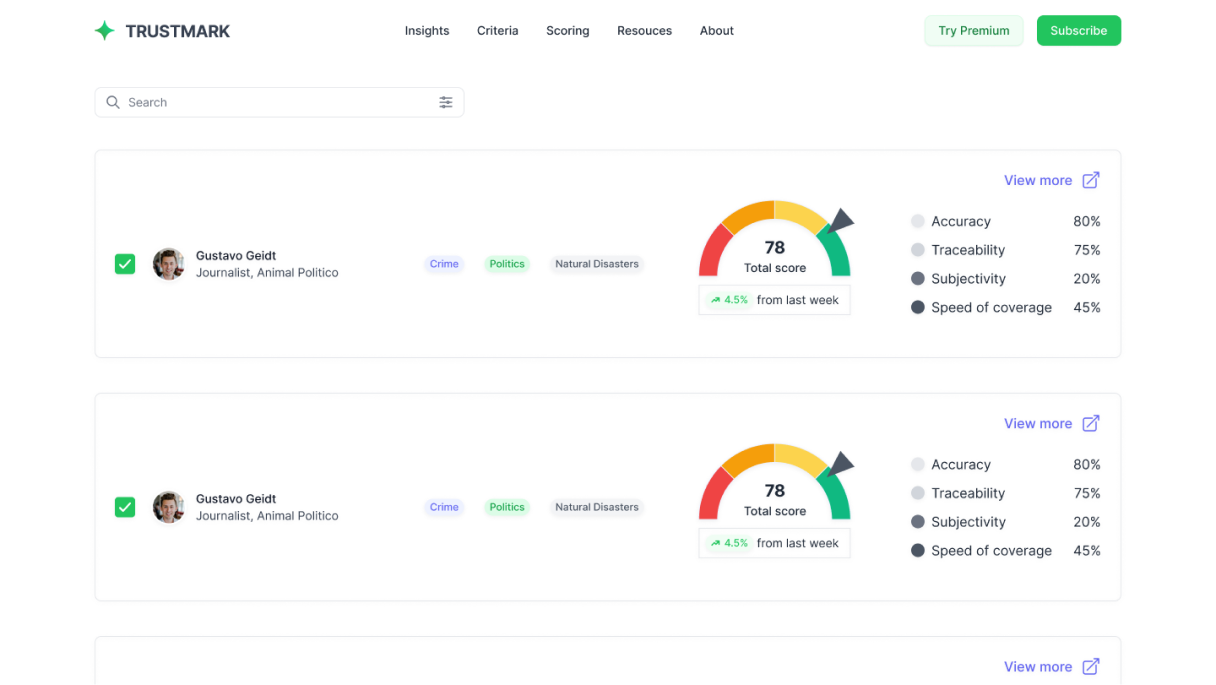

A comprehensive list of sources with a quick summary of each, including their overall real-time score and the categories used for assessment. Users can filter sources by topic, such as crime or politics, and click on a source to open its detailed individual page.

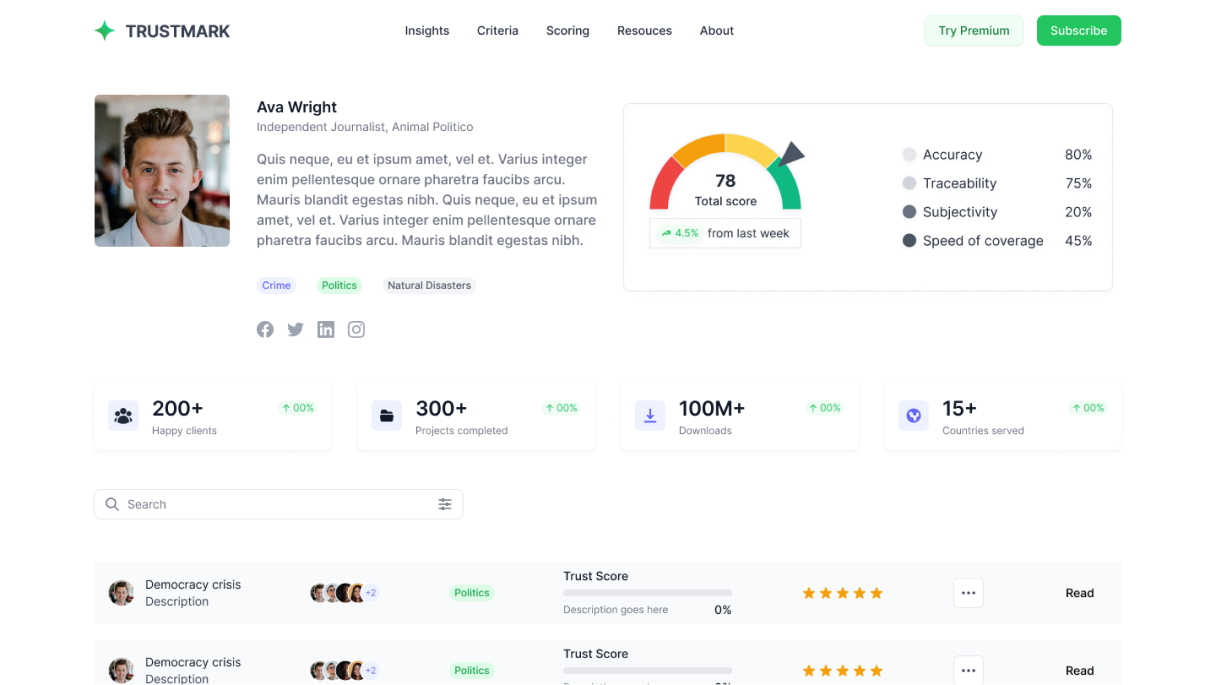

A detailed page featuring a short description, the overall score and its change since the previous period, a category-based score breakdown, assessment criteria for improvement, overall statistics (e.g., number of articles and publishing history), and a list of specific articles with their rank, score, and key details.

THE SOLUTION

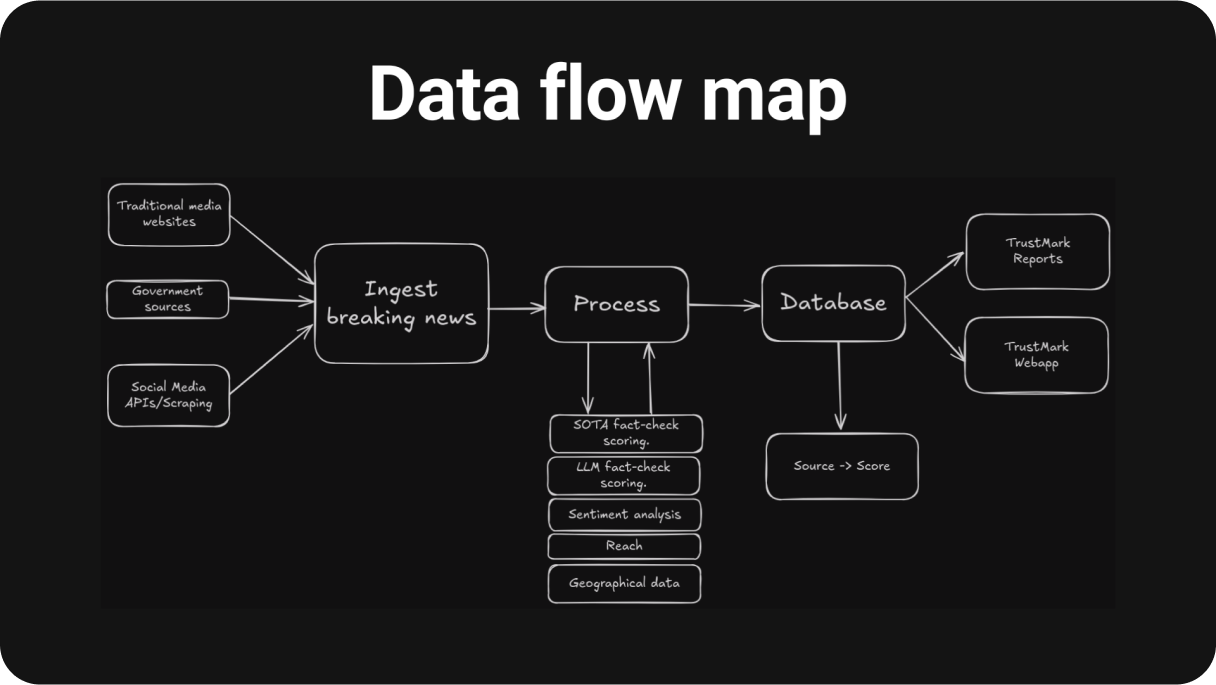

By analyzing historical data, we can trace how specific media outlets, journalists, or sources have covered breaking news in the past. This database forms the foundation for identifying patterns of credible reporting.

Using the database, human fact-checkers establish criteria for accurate reporting, such as reliability, speed, and traceability. These criteria are then used to train AI models to identify trustworthy coverage patterns.

The trained AI models assess breaking news in real time by applying the established criteria and comparing the new coverage against the database of past trustworthy sources and patterns.

The AI assigns a credibility score to breaking news coverage, offering users immediate insights into the reliability of the information they consume.
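As a rough illustration only, the scoring step above could be sketched like this. It is not our hackathon implementation: the trained AI model is replaced by a plain weighted average, and the criterion weights, the `SourceRecord` structure, and the `history_weight` blend between a source's track record and the live article are hypothetical values chosen for the example.

```python
from dataclasses import dataclass

# Hypothetical criterion weights set by human fact-checkers (they sum to 1.0).
CRITERIA_WEIGHTS = {"reliability": 0.5, "speed": 0.2, "traceability": 0.3}


@dataclass
class SourceRecord:
    name: str
    # Hypothetical per-criterion scores in [0, 1], derived from the source's
    # past breaking-news coverage (the historical database).
    history: dict[str, float]


def weighted_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of criterion scores; a missing criterion counts as 0."""
    return sum(weights[c] * scores.get(c, 0.0) for c in weights)


def credibility_score(source: SourceRecord, live: dict[str, float],
                      history_weight: float = 0.6) -> int:
    """Blend the source's track record with signals from the live article
    and return a 0-100 credibility score. A stand-in for the AI model."""
    past = weighted_score(source.history, CRITERIA_WEIGHTS)
    now = weighted_score(live, CRITERIA_WEIGHTS)
    blended = history_weight * past + (1 - history_weight) * now
    return round(100 * blended)


# Usage with made-up numbers for a fictional outlet.
outlet = SourceRecord("Example Outlet",
                      {"reliability": 0.9, "speed": 0.6, "traceability": 0.8})
live_article = {"reliability": 0.7, "speed": 0.9, "traceability": 0.5}
print(credibility_score(outlet, live_article))  # 76
```

Weighting the historical record more heavily than the live signals reflects the idea that, during breaking news, a source's past behavior is the most reliable evidence available.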

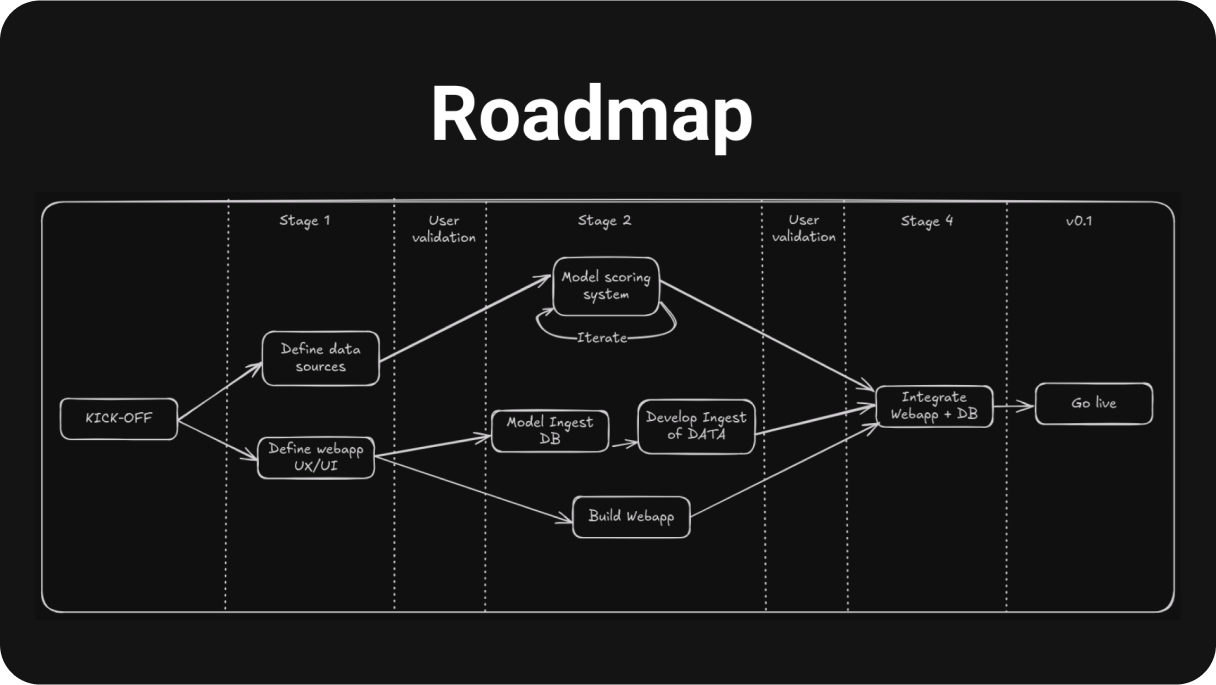

TECHNICAL SPECIFICATIONS AND FUTURE DEVELOPMENT

IMPACT AND OUTCOMES

We didn't make it into the top three recognized projects. To be honest, though, there wasn't much to win besides the title. But is that what success really looks like? To me, not at all.

The real success of this project was how we collaborated as a team. Everyone was deeply involved, we worked together seamlessly, and we built a proof of concept that we were proud to present. The entire team was excited about our solution, and some of us, myself included, would love to continue working on it if funding becomes available. So, if you're an investor looking for your next big startup, feel free to reach out. I've got a pretty cool project to share with you.

LEARNINGS

What I learned from this project was eye-opening. The biggest insight, and honestly the most surprising one, was realizing that we were the only team that built its prototype in Figma (that was my call as the product designer). Every other team used AI tools for quick idea generation. At first, I was a little smug about it; I even mocked their messy-looking prototypes. But by the end, I started to question who really had the better approach: I spent so much time in Figma trying to make everything look good, while they focused on speed and efficiency. That's when it hit me: the era has changed. In hackathons and in business, especially in fast-paced environments, ideas and time are everything, and I need to adapt.

This really sank in during the post-hackathon celebration. One of the team leads from a recognized project told me about his background as a founder and a designer with a degree in graphic design. I jokingly asked why he used AI tools instead of designing himself, and he said, “Because it’s faster.” That moment stuck with me. It was a tough but necessary wake-up call.

Another lesson came from reflecting on why our project wasn't recognized. First, our focus was very niche: fact-checkers. The judges may not have fully understood the need or the problem we were solving. Second, our solution was complex. Its benefits, such as improving the ability to check the credibility of breaking news, would only become clear over time. Right now, it's hard for people to see how such a solution could affect the entire media ecosystem and, ultimately, public trust in the media.

We approached a big, complex problem, and maybe it was too ambitious for an event like this. But honestly, I don’t think we would’ve done anything differently. I still believe this is a strong solution, and it’s worth pursuing. Maybe next time, we’ll find a better way to frame it or focus on a smaller, more tangible piece.

Spending too much time on polished prototypes can be a disadvantage in fast-paced environments where speed and idea execution matter most.

Targeting a specific audience, like fact-checkers, can make it harder for others, including judges, to see the broader impact of the solution.

Solutions addressing large, systemic issues may be difficult to grasp quickly, highlighting the need to simplify and communicate ideas effectively.